The biological roots of AI research

2021.04.07

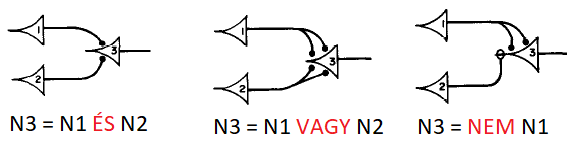

The ‘output’ of a neuron, the so-called axon, works digitally rather than in analogue mode, unlike the vast majority of biological systems: it either emits a signal or it does not (compare FALSE and TRUE in logic, or 0 and 1 in computing). Whether the cell emits a signal depends on the combined signals that reach it as ‘inputs’ from the axons of the other neurons connected to it. If this combined input reaches a threshold value, the cell fires a signal on its output; if it stays below the threshold, no signal is emitted. A branched axon can connect to several other neurons, or connect to the same neuron through multiple nerve endings. In the latter case, the ‘weight’ of the connection is greater. In the diagrams taken from the original paper, at least two input signals are needed to trigger an output signal, but a negative (inhibitory) contact, marked with an open circle, can block it.

According to the two authors, neural networks assembled from such model neurons, whether as logic circuits or in computer technology, can be configured to perform many kinds of logical operations, much as our brain does when it makes decisions.
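The threshold behaviour described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`neuron`, `AND`, `OR`, `AND_NOT`) are made up for this example, the threshold of two follows the diagrams mentioned in the text, and a single active inhibitory contact is assumed to block the output completely.

```python
def neuron(excitatory, inhibitory=(), threshold=2):
    """Model neuron: returns 1 (fires) or 0 (stays silent).

    excitatory: 0/1 signals, one entry per nerve ending
    inhibitory: 0/1 signals on 'negative' contacts (assumed to veto firing)
    """
    if any(inhibitory):
        return 0  # an active negative contact blocks the output
    return 1 if sum(excitatory) >= threshold else 0


def AND(a, b):
    # One nerve ending per input: both inputs must fire to reach the threshold.
    return neuron([a, b])


def OR(a, b):
    # Each input connects with two nerve endings (weight 2),
    # so either input alone reaches the threshold.
    return neuron([a, a, b, b])


def AND_NOT(a, b):
    # a connects with two endings; b is an inhibitory contact.
    return neuron([a, a], inhibitory=[b])


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", AND(a, b), "OR:", OR(a, b), "AND_NOT:", AND_NOT(a, b))
```

Listing an input twice stands in for a connection made through two nerve endings, which is how the text describes a heavier ‘weight’.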

In the years and decades after the paper was published, researchers began to study, on this principle, the behaviour of neural networks made up of layers of simulated neurons with huge numbers of connections between the layers. Initially they worked with just one or two layers, but it turned out that more abstract problems could only be solved with intermediate, so-called hidden, layers. These layers are not connected directly to the inputs and outputs of the whole network, only to the cells of the preceding and following layers.
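A classic small example of a problem that needs a hidden layer is exclusive OR (XOR): no single threshold unit can compute it, but two hidden units feeding one output unit can. The sketch below is illustrative only. The names `step_unit` and `xor_network` and the hand-picked weights are assumptions for this example, and it uses a negative weight rather than the all-or-nothing inhibitory contact of the original model.

```python
def step_unit(inputs, weights, threshold):
    """Weighted threshold unit: fires (1) if the weighted sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def xor_network(a, b):
    # Hidden layer: connected only to the inputs and to the output unit.
    h_or = step_unit([a, b], [1, 1], threshold=1)   # fires if at least one input fires
    h_and = step_unit([a, b], [1, 1], threshold=2)  # fires only if both inputs fire
    # Output layer: "at least one, but not both".
    return step_unit([h_or, h_and], [1, -1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```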

However, this mathematical solution was only fully worked out a good few decades after the discovery of neural networks. Until then something else had to be developed, and AI researchers once again turned to biology: this time to the theory of evolution, the algorithm of evolution.

Evolution takes place among objects that:

- are capable of reproduction,

- pass their characteristics on to their descendants,

- can have these characteristics modified, randomly and to a small extent, and

- have characteristics that influence their reproductive success.

In the case of neural networks, the connections, structure and parameters of the network are the characteristics, and fitness means how well the network performs in recognition and classification. Researchers started from a virtually randomly parameterized neural network (object) and made a large number of copies of it on the computer (reproduction), randomly changing a few randomly selected parameters of each ‘descendant’ network along the way (mutation). The new networks were tested on training patterns and the poor performers were rejected (selection). Slightly imperfect copies were then made of a few of the best, and so on. Through these two highly automatable processes, one random (mutation) and one decidedly not random (selection), the neural networks changed over a sufficient number of generations until they performed the task to be learned to a high standard. For example, they recognized the licence plates of cars driving into a garage with suitable accuracy even when the lighting was poor, it was raining or the licence plate was dirty.

The success of the evolutionary algorithm was an important result in its own right for the training of neural networks. At the same time it gave something back to biology: it confirmed that natural selection, as proposed by Charles Darwin and Alfred Russel Wallace in the middle of the 19th century and proven time and again since, can produce astoundingly complex and well-functioning systems even outside biology.
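The loop of reproduction, mutation and selection described above can be sketched as follows. This is a minimal illustration, not the method used in any particular system: the tiny 2-2-1 network, the XOR training patterns, and all names and settings (`predict`, `fitness`, `mutate`, `evolve`, population size, mutation scale) are assumptions chosen for this example. It also assumes that the best parents stay in the pool alongside their descendants, so the best score never gets worse from one generation to the next.

```python
import random

# Training patterns (assumed for illustration): XOR of two binary inputs.
PATTERNS = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(x):
    return 1 if x >= 0 else 0


def predict(params, inputs):
    """2-2-1 threshold network; params holds 9 numbers (weights and biases)."""
    a, b = inputs
    h1 = step(params[0] * a + params[1] * b + params[2])
    h2 = step(params[3] * a + params[4] * b + params[5])
    return step(params[6] * h1 + params[7] * h2 + params[8])


def fitness(params):
    """Number of training patterns the network classifies correctly."""
    return sum(predict(params, x) == y for x, y in PATTERNS)


def mutate(params, rng, n_changes=2, scale=0.5):
    """Copy a network and randomly change a few randomly selected parameters."""
    child = list(params)
    for i in rng.sample(range(len(child)), n_changes):
        child[i] += rng.gauss(0, scale)
    return child


def evolve(generations=200, population=50, survivors=5, seed=0):
    rng = random.Random(seed)
    # Start from randomly parameterized networks.
    parents = [[rng.uniform(-1, 1) for _ in range(9)] for _ in range(survivors)]
    history = []
    for _ in range(generations):
        # Reproduction with mutation: imperfect copies of the current best.
        children = [mutate(rng.choice(parents), rng) for _ in range(population)]
        # Selection: keep only the top performers, reject the rest.
        ranked = sorted(parents + children, key=fitness, reverse=True)
        parents = ranked[:survivors]
        history.append(fitness(parents[0]))
    return parents[0], history


if __name__ == "__main__":
    best, history = evolve()
    print("best score:", history[-1], "of", len(PATTERNS))
```

Nothing in the loop knows how the network works internally; it only copies, perturbs and ranks, which is why the same scheme can be pointed at very different tasks.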

In the next part of the series I will cover what AI has to say about the errors and distortions of the human mind, our bad habits and our addictions, because certain AI solutions seem to be starting to display these characteristics themselves. Given their biological heritage, that is perhaps not so surprising.

Hraskó Gábor

Head of CCS Division, INNObyte
